Data Center

Adjust thresholds and understand the impact on data center performance, identify opportunities to refresh server infrastructure, and understand when and where redundancy is required to reduce the need to duplicate infrastructure.

A data center can consume 10 to 100 times more energy per square foot than the average office building and, in most data centers, at least as much energy is used to keep the building cool as is used to operate the servers it contains. Stanford University estimates that data centers currently use between 1 and 1.5% of all the electricity generated on the planet. The energy performance of data centers is, therefore, increasingly a focus of green initiatives.

In many cases, data center managers do not have comprehensive tools for monitoring and controlling energy use in the data centers. As a result, there is a lack of systematic analysis of energy strategies to determine which will yield the greatest benefits, or pose the greatest risk, to ongoing operations. This makes decision making difficult, and energy management is often based on guesswork and ad-hoc strategies. When data for energy use is available, the biggest energy consumers can be identified. It then becomes IT department handles the installation of servers and other equipment, runs the application software and lends support to the users. The facilities department looks after the real estate, the building and its maintenance, and allocates space for electrical equipment and offices. It is unusual that any one person has overall responsibility for the efficiency of the data center, and the way in which IT and building systems interact with each other to consume electricity.

A new approach, called data center infrastructure managed services (DCIM), brings together information technology (IT) and facility management to centralise monitoring, management and intelligent capacity planning for all a data center’s critical systems. The DCIM approach can help data possible to develop a plan to reach energy efficiency goals, to implement a plan and document its progress.

Integrated Data Center Management

Data Center Management is a multi-speciality discipline. The data center manager has to maintain the data, ensure that the operations and facility run smoothly and understand the needs of the business and its workforce 24/7 on all 365 days of the year.

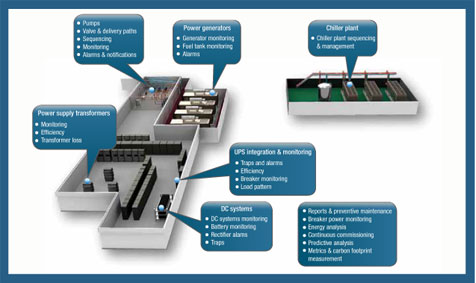

As well as IT systems, data centers generally include redundant or backup power supplies, redundant data communications connections, environmental controls (e.g. air conditioning and fire suppression) and security devices. In many organisations, it is still common to find that running the data center is split between two separate departments. The center managers identify and eliminate sources of risk and increase the availability of critical IT systems. Data center owners can reduce their costs by detecting unused servers and turning them off, by virtualising servers while monitoring central processor units (CPU) for overloading, automatically switching to low power modes at night and by delaying upgrades to uninterruptible power supply (UPS) and backup generator sets until it is really necessary.
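As an illustration of the cost-saving step of detecting unused servers, the following is a minimal sketch in Python. It is not part of any Pacific Controls product: the `ServerSamples` type, the `find_idle_servers` function and the 2% average / 10% peak CPU limits are all hypothetical choices made for the example, and a real deployment would also look at network and disk activity before switching anything off.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ServerSamples:
    """CPU utilisation samples (0-100 %) collected for one server (hypothetical type)."""
    name: str
    cpu_percent: list[float]


def find_idle_servers(servers, max_avg_cpu=2.0, max_peak_cpu=10.0):
    """Return names of servers whose average and peak CPU stay within the limits.

    The default limits are illustrative assumptions, not recommended values.
    """
    idle = []
    for server in servers:
        if not server.cpu_percent:
            continue  # no data collected: do not guess
        if (mean(server.cpu_percent) <= max_avg_cpu
                and max(server.cpu_percent) <= max_peak_cpu):
            idle.append(server.name)
    return idle


servers = [
    ServerSamples("web-01", [35.0, 60.0, 42.0]),
    ServerSamples("old-batch-07", [0.5, 1.0, 0.8]),
    ServerSamples("unmonitored", []),
]
candidates = find_idle_servers(servers)
print(candidates)
```

Servers with no samples are skipped rather than reported, so that missing monitoring data is never mistaken for an idle machine.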

Effective data center operation requires a balanced investment in both the facility and the equipment it houses. Scalability and flexibility are achieved by integrating building and computer engineering to standardise the way servers are installed, and by designing the installation in modular units that include both servers and their support systems. Modularity makes it easier to adjust the size of the system to cope with fluctuations in demand. Dedicated centralised systems, by contrast, require more accurate forecasts of future needs to prevent expensive over-construction or, perhaps worse, under-construction that fails to meet those needs. If the data center is planned in repetitive building blocks of equipment, with associated power and support equipment (air conditioning in particular), then these blocks can be added and removed as required.

Virtualisation is Risky without Improved Management

Server virtualisation is a popular method of increasing a data center’s application capacity to accommodate the rapid growth of business-critical IT applications, without making additional investment in physical infrastructure. Server virtualisation also enables rapid provisioning of applications, as multiple applications can be supported by a single provisioned server. Change management is key to its success.

Data center monitoring and management systems have evolved from the days when they were focused on server availability and alarms. All now capture a range of real-time data within a proprietary interface, but many lack the functionality needed to monitor the facility systems and to adjust these interdependent systems to address changing business and technology needs.

The problems caused by the disconnection between the facility and the IT infrastructure are aggravated by virtualisation. Virtualisation creates a dynamic environment within a static one, where rapid changes in computing load translate to increased power consumption and a need for efficient heat dispersal. Rapid increases in heat densities can place additional stress on the data center’s physical infrastructure, resulting in a lack of efficiency, as well as an increased risk of overloading and outages. In addition to increasing risks to availability, inefficient allocation of virtualised applications can increase power consumption and concentrate heat densities, causing unanticipated ‘hotspots’ in server racks and other areas. The need to manage these risks has resulted in demand for integrated monitoring and management solutions capable of bridging the gap between IT and facilities systems.

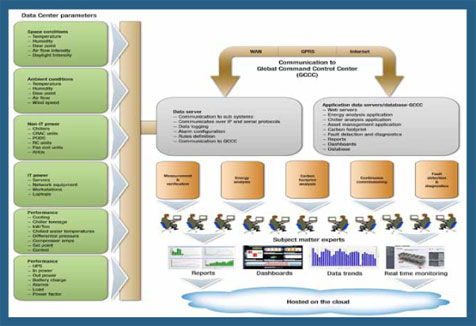

Pacific Controls Managed Services for DCIM

Pacific Controls Managed Services for Critical Assets Monitoring provide a holistic view of the facility’s data center infrastructure. Pacific Controls’ specialised software, hardware and sensors link the data center to the Galaxy platform, which acts as a single, common, real-time monitoring and management platform for all interdependent systems across both the IT and facility infrastructure. Pacific Controls managed services can be used to identify interdependencies between facility and IT infrastructures, to alert the facility manager to gaps in system redundancy, and to provide dynamic, holistic benchmarks on power consumption and efficiency to measure the effectiveness of ‘Green IT’ initiatives.

In addition to real-time monitoring, these tools include modelling and management functionality to aid long-term capacity planning; they also allow dynamic optimisation of critical systems performance and efficiency to ensure efficient asset utilisation. Using Pacific Controls managed services to support DCIM makes intelligent capacity planning possible, through the synchronised monitoring and management of both physical and virtual infrastructure. It allows the aggregation and correlation of real-time data from heterogeneous infrastructure to provide data center managers with a single centralised repository of performance and resource usage information at the GCCC. Data center managers can use Gbots™ to automate the management of IT applications based on both server capacity and conditions within the data center’s physical infrastructure. This will optimise the performance, reliability and efficiency of the entire data center infrastructure, increase energy efficiency and reduce overall energy use.

DCIM enables data center managers to measure energy use in real time and, by managing the servers’ energy consumption and controlling the heat generated, enables safe operation at higher densities. DCIM can lead to energy savings that reduce a data center’s total operating expenses.

CFD Aids Data Center Design and Management

In addition to measuring and controlling energy use, Pacific Controls managed services use Computational Fluid Dynamics (CFD) to create a virtual model of the airflow in the facility and to find ways to maximise the cooling effect, which further drives down cooling costs. CFD provides companies with a detailed 3-D analysis of how cold air is moving through a data center, identifying potential hotspots where equipment is receiving too little airflow. Thermal mapping can also locate areas in a data center that are receiving more cold air than is needed. In addition, the tool can identify places where cold air is being wasted or is mixing with hot air, reducing the effectiveness of the overall cooling and wasting energy. Proposed green initiatives can be tested in a virtual environment. Current power consumption can be benchmarked using the data from real-time feeds and equipment ratings; the Galaxy platform can then be used to model the effects of the proposed initiatives on the data center’s power usage effectiveness (PUE) and data center infrastructure efficiency (DCIE) before committing resources to an implementation. Using this approach, servers can be placed in the optimal position with regard to power, cooling and space requirements.

Data Center KPI Definition and Monitoring

There are a number of different KPIs that can be used to measure the energy efficiency of a data center, all of which are calculated by the Pacific Controls Galaxy platform:

- Data center infrastructure efficiency (DCIE) is calculated by dividing the ICT equipment power by the total facility power. It is used by regulators in the European Union (EU).

- Power usage effectiveness (PUE) is the reciprocal of DCIE: the total facility power divided by the ICT equipment power. It is used by regulators in the US.

- Data center energy productivity (DCeP) is defined as the useful work produced in a data center divided by the total energy consumed to produce that work.

- Bits per kWh uses the outbound data streams as the measure of useful work.

- Weighted CPU Utilisation.

- Operating System Workload Efficiency.
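The first three definitions in the list above translate directly into arithmetic. The following Python sketch shows them under stated assumptions: the function names, the kW and kWh units and the sample figures (500 kW of ICT load in a 900 kW facility) are illustrative only and do not come from the Galaxy platform.

```python
def dcie(ict_power_kw, total_facility_power_kw):
    """Data center infrastructure efficiency: ICT power / total facility power."""
    if total_facility_power_kw <= 0:
        raise ValueError("total facility power must be positive")
    return ict_power_kw / total_facility_power_kw


def pue(ict_power_kw, total_facility_power_kw):
    """Power usage effectiveness: total facility power / ICT power."""
    if ict_power_kw <= 0:
        raise ValueError("ICT equipment power must be positive")
    return total_facility_power_kw / ict_power_kw


def dcep(useful_work, total_energy_kwh):
    """Data center energy productivity: useful work / energy consumed.

    With outbound bits as the measure of useful work, this is bits per kWh.
    """
    if total_energy_kwh <= 0:
        raise ValueError("total energy must be positive")
    return useful_work / total_energy_kwh


# Illustrative figures only: 500 kW of ICT load in a 900 kW facility.
ict_kw, facility_kw = 500.0, 900.0
print(f"PUE  = {pue(ict_kw, facility_kw):.2f}")
print(f"DCIE = {dcie(ict_kw, facility_kw):.1%}")
```

Because PUE and DCIE are reciprocals, their product is always 1, which gives a quick consistency check on any reported pair of values.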

In order to take into account more variables, Pacific Controls Managed Services for Critical Assets Monitoring capture overall efficiency in matrices that measure the utilisation of various assets throughout the data center. These matrices can include utilisation values for:

- Data center floor space

- Rack space

- Cooling capacity

- Power capacity

- CPU and network utilisation (time averaged)

- Storage utilisation.
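One simple way to picture such a utilisation matrix is as a table of used capacity divided by installed capacity for each asset. The Python sketch below assumes that reading; the asset names, units and figures are hypothetical and the actual matrices produced by the managed service may be structured differently.

```python
def utilisation(used, capacity):
    """Return used / capacity as a fraction for every asset in `capacity`."""
    result = {}
    for asset, installed in capacity.items():
        if installed <= 0:
            raise ValueError(f"capacity for {asset!r} must be positive")
        result[asset] = used.get(asset, 0.0) / installed
    return result


def most_constrained(utilisation_by_asset):
    """Return the asset with the highest utilisation, i.e. the likely bottleneck."""
    return max(utilisation_by_asset, key=utilisation_by_asset.get)


# Hypothetical capacities and current usage for one data hall.
capacity = {"floor_space_m2": 1000.0, "rack_units": 4200.0,
            "cooling_kw": 800.0, "power_kw": 1000.0}
used = {"floor_space_m2": 600.0, "rack_units": 2100.0,
        "cooling_kw": 720.0, "power_kw": 650.0}

util = utilisation(used, capacity)
for asset, fraction in util.items():
    print(f"{asset:>15}: {fraction:.0%}")
print("Most constrained:", most_constrained(util))
```

In this example the hall has spare floor and rack space, but cooling is the resource that would run out first, which is the kind of imbalance a single headline metric can hide.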

Other KPIs generated by default in the Pacific Controls managed service include:

- Watts per square foot or square metre

- Power distribution: UPS efficiency, IT power supply efficiency

- Uptime: IT Hardware Power Overhead Multiplier (ITac/ITdc)

- Heating, ventilation and air conditioning (HVAC): IT total/HVAC total, fan watts/cfm, pump watts/gpm, chiller plant (or chiller/overall HVAC) kW/ton

- Lighting watts per square foot or square metre

- Rack cooling index (fraction of IT within recommended temperature range)

- Return temperature index (RAT-SAT)/ITΔT.
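The last two entries in the list can be sketched in Python as follows. This is an illustration under assumptions, not the platform's implementation: the rack cooling figure follows the simplified definition given above (the fraction of IT inlet readings inside a recommended range), the 18 to 27 °C default range is an assumed setting that should be replaced by the site's own limits, and the denominator of the return temperature index is read here as the temperature rise across the IT equipment.

```python
def rack_cooling_fraction(inlet_temps_c, low_c=18.0, high_c=27.0):
    """Fraction of IT inlet readings within [low_c, high_c].

    The default range is an assumption for this example.
    """
    if not inlet_temps_c:
        raise ValueError("at least one inlet temperature is required")
    in_range = sum(1 for t in inlet_temps_c if low_c <= t <= high_c)
    return in_range / len(inlet_temps_c)


def return_temperature_index(return_air_c, supply_air_c, it_temp_rise_c):
    """(RAT - SAT) divided by the temperature rise across the IT equipment."""
    if it_temp_rise_c <= 0:
        raise ValueError("IT temperature rise must be positive")
    return (return_air_c - supply_air_c) / it_temp_rise_c


# Hypothetical readings in degrees Celsius.
inlets = [20.0, 24.0, 26.0, 29.0]
print(f"Rack cooling fraction: {rack_cooling_fraction(inlets):.0%}")
print(f"Return temperature index: {return_temperature_index(27.0, 18.0, 12.0):.2f}")
```

A return temperature index well below 1 is commonly read as a sign that cold air is bypassing the equipment, and a value above 1 as a sign that hot air is recirculating, which connects this KPI to the airflow problems that CFD analysis is used to locate.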

Benefits for Data Center Equipment Suppliers

Using Pacific Controls Managed Services for Critical Assets Monitoring, data center equipment manufacturers can offer their customers monitoring and management services through a single interface that connects all the equipment they have deployed.

They can offer predictive maintenance services to ensure that all the equipment in a data center is operating reliably, with little or no downtime. They can also offer energy management services to reduce the carbon footprint of the data center, and intelligent capacity planning services for clients who wish to extend their infrastructure. This allows them to maintain an ongoing relationship with data center operators and provides a central data repository for analysis that will enhance R&D.

Benefits for Data Center Owners

Data center owners can understand and track thresholds at which action must be taken for variables such as CPU usage, temperature and humidity. They can manage loadings to ensure data center infrastructure is used as efficiently as possible and forecast future consumption. This means that capacity can be planned and equipment and other supplies procured in good time, rather than having to increase capacity at short notice to handle peaks in loading.

The industry is finding it difficult to recruit data center professionals, as the tasks associated with managing a data center have become more varied and demanding. Presently, there are many job vacancies in data centers and recruitment can take up to six months. In many cases, using Pacific Controls Managed Services for Critical Assets Monitoring and its subject matter experts (SMEs) at the GCCC can reduce the workload on staff and the number of qualified managers needed.

The analytical tools allow data center operators to manage change effectively. They can create ‘what-if’ scenario models to evaluate alternative strategies. These allow them to:

- Adjust thresholds and understand the impact on data center performance

- Identify opportunities to refresh server infrastructure

- Understand when and where redundancy is required to reduce the need to duplicate infrastructure.

Data center owners can reduce their energy consumption and GHG emissions and meet corporate and government sustainability goals.
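A very small ‘what-if’ model for adjusting thresholds is to replay historical readings against several candidate limits and count how often each would have triggered action. The Python sketch below assumes that approach; the function names, the inlet temperature readings and the candidate limits are hypothetical and are not taken from the Pacific Controls tools.

```python
def count_breaches(samples, threshold):
    """Count the samples at or above the threshold."""
    return sum(1 for value in samples if value >= threshold)


def compare_thresholds(samples, candidates):
    """Map each candidate threshold to the number of breaches it would have produced."""
    return {threshold: count_breaches(samples, threshold) for threshold in candidates}


# Hypothetical historical rack inlet temperatures in degrees Celsius.
inlet_history_c = [22.0, 24.5, 26.0, 27.5, 29.0, 31.0]

for threshold, breaches in compare_thresholds(inlet_history_c, [25.0, 27.0, 30.0]).items():
    print(f"Threshold {threshold:.1f} C -> {breaches} breaches")
```

Comparing the counts shows the trade-off an operator faces: a lower limit gives earlier warning but more alerts to handle, while a higher limit is quieter but leaves less margin before equipment is at risk.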